Introductory summary

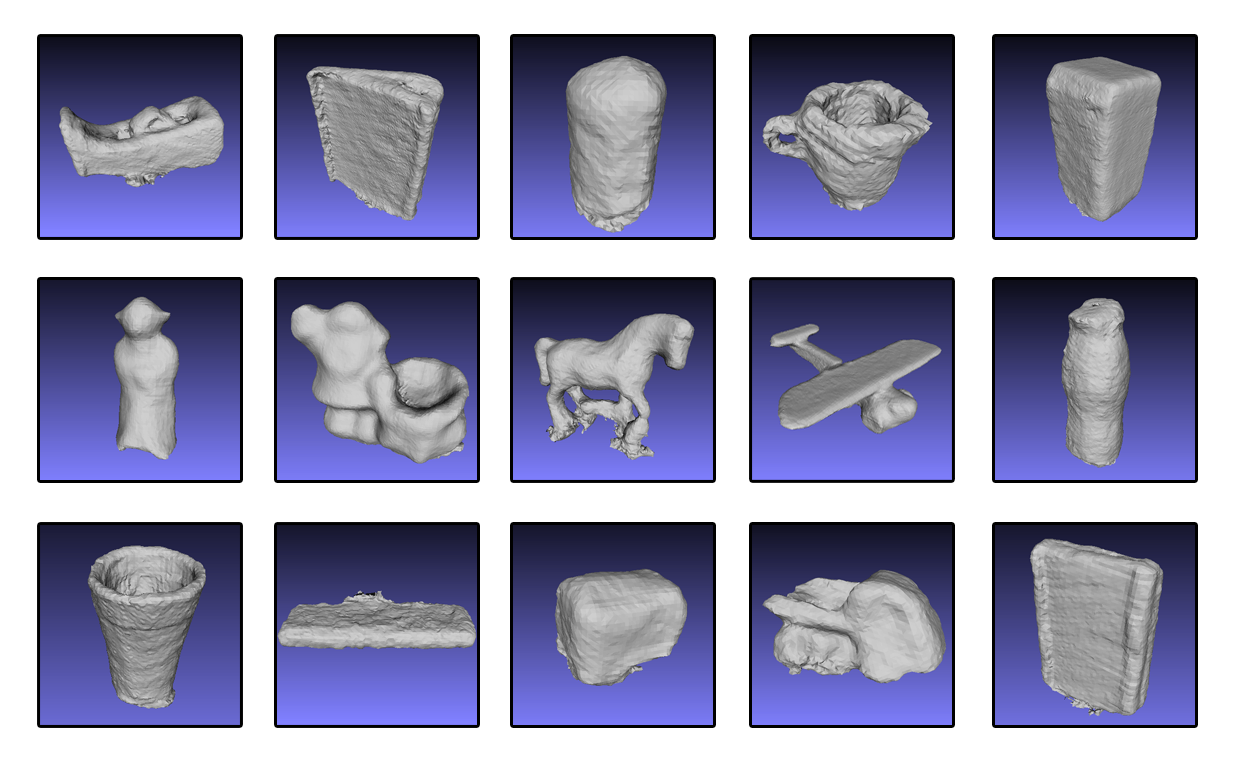

The objective of this SHREC track is to retrieve 3D models captured with a commodity low-cost depth scanner. The dataset, comprising 192 models, was captured with the Microsoft Kinect camera. As the sample in the picture above shows, these models are much rougher than the standard meshes used in previous editions of the Shape Retrieval Contest.

The advent of low-cost scanners in the consumer market, such as the Microsoft Kinect, has made this technology available to the everyday user. Although designed for a different purpose, such devices have proven able to digitize 3D objects with acceptable quality, at least in the many contexts where 3D capturing devices were previously almost absent. As a result, the number of 3D models on the Internet is growing, and it is expected to keep growing as new ways of capturing and sharing 3D information develop, taking advantage of cheaper and more powerful technology.

Task description

In response to a given set of queries, the task is to compute similarity scores against the target models and, for each query, return a ranked list of targets along with their similarity scores. The query set is a subset of the larger collection.
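As a rough illustration of the expected output, the sketch below ranks the target models for one query. It assumes each model has already been reduced to a fixed-length feature vector and compares vectors with cosine similarity. The descriptors, the `cosine_similarity` and `rank_targets` helpers, and the model identifiers are all hypothetical; the track does not prescribe a descriptor or a similarity measure.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector is treated as similar to nothing
    return dot / (norm_a * norm_b)

def rank_targets(query_id, descriptors):
    """Return [(model_id, score), ...] sorted by decreasing similarity to the query."""
    query_vec = descriptors[query_id]
    scored = [(model_id, cosine_similarity(query_vec, vec))
              for model_id, vec in descriptors.items()]
    # Sort by score (descending); break ties by model id for reproducibility.
    scored.sort(key=lambda pair: (-pair[1], pair[0]))
    return scored

# Hypothetical 3-dimensional descriptors for three models.
descriptors = {144: [1.0, 0.0, 0.5], 24: [0.9, 0.1, 0.4], 45: [0.0, 1.0, 0.0]}
ranking = rank_targets(144, descriptors)
```

Because the query set is a subset of the collection, the query model itself appears in its own ranking, normally in first position.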

Ranked lists

Each file representing an evaluation should be named %id-%query, where %id is the number of the run and %query is the identifier of the object representing the query. Please submit the results in any ASCII file format, such as '.res'.

Example (for a faux query 144.off):

144 1.00000

24 0.87221

45 0.79915

201 0.59102

203 0.54902

32 0.51241

...(etc)...

This would be written to a file named, for instance, 1-144.res.
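The naming and layout rules above could be implemented along the following lines. This is a minimal sketch: the `write_ranked_list` helper is hypothetical, and the one-pair-per-line layout with five decimal places is inferred from the example rather than stated as a requirement.

```python
import tempfile
from pathlib import Path

def write_ranked_list(run_id, query_id, ranking, out_dir="."):
    """Write one ranked list as '<run_id>-<query_id>.res', one 'id score' pair per line."""
    path = Path(out_dir) / f"{run_id}-{query_id}.res"
    lines = [f"{model_id} {score:.5f}" for model_id, score in ranking]
    path.write_text("\n".join(lines) + "\n", encoding="ascii")
    return path

# Usage: run 1, query 144, written to a temporary directory.
ranking = [(144, 1.0), (24, 0.87221), (45, 0.79915)]
with tempfile.TemporaryDirectory() as tmp:
    path = write_ranked_list(1, 144, ranking, out_dir=tmp)
    content = path.read_text(encoding="ascii")
```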

Data set

The collection is composed of 192 scanned models, which were acquired through the real-time capture of 224 collected objects. Of these, 32 were rejected due to low quality or material incompatibility. The range images were captured using a Microsoft Kinect camera. The collection is provided in three different ASCII file formats (PLY, OFF and STL), each representing the scans as triangular meshes.
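For participants who want to load the meshes without a geometry library, an ASCII OFF file can be read with a few lines of code. The sketch below is an assumption-laden illustration, not part of the track materials: the `parse_off` helper is hypothetical, and it assumes a plain `OFF` header with vertex, face and edge counts, no per-face colour data, and `#` comments only.

```python
def parse_off(text):
    """Parse an ASCII OFF mesh and return (vertices, faces)."""
    # Drop comments and blank lines, then flatten the file into tokens.
    tokens = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if line:
            tokens.extend(line.split())
    if not tokens or tokens[0] != "OFF":
        raise ValueError("not an OFF file: missing 'OFF' header")
    n_vertices, n_faces = int(tokens[1]), int(tokens[2])  # tokens[3] is the edge count
    pos = 4
    vertices = []
    for _ in range(n_vertices):
        vertices.append(tuple(float(t) for t in tokens[pos:pos + 3]))
        pos += 3
    faces = []
    for _ in range(n_faces):
        size = int(tokens[pos])  # number of vertex indices in this face
        faces.append(tuple(int(t) for t in tokens[pos + 1:pos + 1 + size]))
        pos += 1 + size
    return vertices, faces

# A unit square split into two triangles.
sample = """OFF
4 2 0
0 0 0
1 0 0
1 1 0
0 1 0
3 0 1 2
3 0 2 3
"""
vertices, faces = parse_off(sample)
```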

The collection itself is uncategorized. The rankings are to be compared against a human-generated ground truth created with a group of 20 subjects.

A test set is available here.

Evaluation Methodology

We will employ the following evaluation measures: Precision-Recall curve; E-Measure; Discounted Cumulative Gain; Nearest Neighbor, First-Tier (Tier1) and Second-Tier (Tier2).
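To make these measures concrete, the sketch below computes Nearest Neighbor, First-Tier, Second-Tier, E-Measure and a normalized Discounted Cumulative Gain for a single query. It follows definitions commonly used in shape retrieval benchmarks (for example, an E-Measure over the first 32 retrieved models); the exact definitions used by the track's evaluation are not given here, so treat the cutoff, the exclusion of the query from its own ranking, and the `evaluate_query` helper as assumptions. The relevant set in the example is invented for illustration. The Precision-Recall curve is omitted for brevity.

```python
import math

def evaluate_query(ranked_ids, relevant_ids, query_id, e_cutoff=32):
    """Compute NN, Tier1, Tier2, E-Measure and normalized DCG for one query."""
    relevant = set(relevant_ids) - {query_id}
    ranked = [m for m in ranked_ids if m != query_id]  # the query itself is not counted
    n_rel = len(relevant)
    if n_rel == 0 or not ranked:
        raise ValueError("need at least one relevant model and one retrieved model")
    hits = [1 if m in relevant else 0 for m in ranked]

    nearest_neighbor = float(hits[0])
    first_tier = sum(hits[:n_rel]) / n_rel        # recall within the top K
    second_tier = sum(hits[:2 * n_rel]) / n_rel   # recall within the top 2K

    # E-Measure: harmonic mean of precision and recall over the first e_cutoff results.
    top = hits[:e_cutoff]
    found = sum(top)
    precision = found / len(top)
    recall = found / n_rel
    e_measure = 0.0 if found == 0 else 2 * precision * recall / (precision + recall)

    # DCG: position 1 is undiscounted, position i >= 2 is discounted by log2(i).
    dcg = hits[0] + sum(h / math.log2(i) for i, h in enumerate(hits[1:], start=2))
    ideal = 1.0 + sum(1.0 / math.log2(i) for i in range(2, n_rel + 1))

    return {"NN": nearest_neighbor, "Tier1": first_tier, "Tier2": second_tier,
            "E": e_measure, "DCG": dcg / ideal}

# Hypothetical ranking and ground truth for query 144.
scores = evaluate_query([144, 24, 45, 201, 203, 32], {24, 201, 32}, query_id=144)
```

In this example two of the three relevant models appear in the top three positions, so First-Tier is 2/3, while all three appear within the top six, so Second-Tier is 1.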

Procedure

The following list is a description of the activities:

- The participants must register by sending a message to shrec@3dorus.ist.utl.pt. Already registered participants do not need to re-apply.
- The database will be made available via this website in password-protected zip files. Participants will receive the password by e-mail, along with a list of instructions. The set will be available in any of three file formats (PLY/OFF/STL) starting on the 11th of February and until the 15th.
- Participants will submit the ranked lists and similarity scores for the query set up to the 15th of February. Up to 5 ranked lists per query set may be submitted, resulting from different runs. Each run may be a different algorithm, or a different parameter setting. We ask the participants to provide the binary program that generated the results. If more than one executable or a different parametrization is used, we also ask for appropriate documentation along with a one-page summary of the proposed method.
- The evaluations will be done automatically.
- The organization will release the evaluation scores of all the runs.
- The participants write a one-page description of their method and comment on the evaluation results, with two figures.
- The track results are combined into a joint paper, published in the proceedings of the Eurographics Workshop on 3D Object Retrieval.
- The description of the tracks and their results are presented at the Eurographics Workshop on 3D Object Retrieval (May 11, 2013).
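Before submitting, it may help to check a set of result files against the constraints listed above: the %id-%query naming scheme, at most 5 runs, and one ranked list per query in each run. The following is a hypothetical pre-submission check, not an official validator; the `check_submission` helper, the file name pattern and the requirement that every run covers every query are assumptions.

```python
import re
from collections import defaultdict

MAX_RUNS = 5  # "Up to 5 ranked lists per query set may be submitted"
# Assumed pattern: numeric run id, hyphen, query id, optional extension.
NAME_PATTERN = re.compile(r"^(\d+)-([^.]+)(\.\w+)?$")

def check_submission(filenames, query_ids):
    """Return a list of human-readable problems found in a set of result file names."""
    problems = []
    runs = defaultdict(set)  # run id -> set of query ids covered by that run
    for name in filenames:
        match = NAME_PATTERN.match(name)
        if match is None:
            problems.append(f"{name}: does not match <run>-<query>")
            continue
        run_id, query = match.group(1), match.group(2)
        if query not in query_ids:
            problems.append(f"{name}: unknown query '{query}'")
            continue
        runs[run_id].add(query)
    if len(runs) > MAX_RUNS:
        problems.append(f"{len(runs)} runs submitted, at most {MAX_RUNS} allowed")
    for run_id, seen in sorted(runs.items()):
        missing = sorted(set(query_ids) - seen)
        if missing:
            problems.append(f"run {run_id}: missing queries {missing}")
    return problems

# Usage with two hypothetical queries: run 2 lacks a list for query 24.
problems = check_submission(
    ["1-144.res", "1-24.res", "2-144.res", "notes.txt"],
    query_ids={"144", "24"},
)
```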

Schedule

| Date | Activity |
| --- | --- |
| February 7 | A test set will be available online. This will be a subset of the final set. |
| February 11 | Please register before this date. |
| February 11 | Distribution of the final query sets. Participants can start the retrieval. |
| February 18 | Submission of results (ranked lists) and a one-page description of their method(s). |
| February 20 | Distribution of relevance judgments (human-generated) and evaluation scores. |
| February 25 | Submission of final descriptions (two pages) for the contest proceedings. |
| February 28 | Track is finished, and results are ready for inclusion in a track report. |
| March 29 | Camera-ready track papers submitted for printing. |
| May 11 | EUROGRAPHICS Workshop on 3D Object Retrieval, including SHREC'2013. |

Any further questions can be sent to shrec@3dorus.ist.utl.pt.